Adding Artificial Intelligence to your apps can streamline your workflow, safeguard your users, surface content and enhance your business intelligence.

It’s common for mobile apps to offer some kind of image capture functionality. Indeed, one of the deciding factors in whether you need an app at all, or whether a responsive website will do, is whether you can make use of the unique capabilities of a mobile device, such as the camera. So whether you have a social media app capturing photos from the public, or an enterprise app capturing site photos from workers in the field, if you’ve got an app it’s likely you’ll be capturing photos.

However, offering this functionality in your app brings potential issues, and missed opportunities, that are often overlooked.

What happens to these images after they are captured? No doubt they are uploaded to a backend system – probably into the Cloud or some back office line-of-business system. There they’ll languish as image files that your system knows little about, eating up storage space while rarely being viewed again. What’s more, if you’re capturing images from the public, how can you be sure the content is suitable, and not a liability?

Traditional content moderation workflows can be laborious and hard to scale, whilst ignoring the problem can lead to compliance issues if you don’t know what content is available on your system. This month the UK Government, through the Centre for Data Ethics and Innovation (CDEI), published recommendations that social media platforms be more accountable for the content they publish. The pressure is firmly on online platforms to take more responsibility for the content they host and publish.

Furthermore, regardless of the origin of the content, or its intended audience, what does your system actually know about the images? You’ll know the images exist, because they are in your database. But what is the content of each photo? Is it indoors or outdoors? Does it contain identifiable faces, objects or brands? Does it contain adult, offensive or sensitive content? Can your search feature surface relevant images based on search keywords? If your system can’t answer these questions, then you may want to consider applying the Artificial Intelligence field of Computer Vision to your platform.

Using Computer Vision, we can analyze an image and derive information about its content: describe its general characteristics (e.g. indoor, outdoor, people, places), extract text, extract details about objects identified in the image, and classify unsuitable content (e.g. adult content, identifiable photos of people). We can then use this structured metadata to make decisions about the image’s suitability, depending on its intended audience and usage. We can also persist the metadata in the backend system to assist with content indexing, allowing relevant images to be surfaced by your search technology and used in data analytics and business intelligence.

The use cases of this technology are far reaching, but here are a few examples:

- Block your users from uploading adult or other unsuitable content

- Reject poor quality images – if the Computer Vision algorithm can’t derive any useful information from the photo, it’s likely a human can’t either

- Only allow images that match a ‘whitelist’ of subject matter – if you have a specific application, for example a social platform for buying and selling used vehicles, you’ll only want to allow photos that contain clear pictures of vehicles or vehicle interiors

- Extract metadata describing the contents of the image to assist with search indexing and discoverability of imagery
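
To make the first three use cases concrete, here is a minimal sketch of how upload rules might be applied once an image has been analyzed. The `ImageAnalysis` shape, the thresholds and the whitelist are all assumptions made up for illustration, not any vendor’s schema, so treat it as a starting point rather than a finished moderation policy.

```python
from dataclasses import dataclass, field


# Hypothetical, simplified view of an analysis result.
# Field names are assumptions for illustration only.
@dataclass
class ImageAnalysis:
    tags: dict[str, float] = field(default_factory=dict)  # tag -> confidence (0..1)
    adult_score: float = 0.0  # 0 = safe, 1 = explicit


ADULT_THRESHOLD = 0.5      # assumed cut-off; tune for your audience
MIN_TAG_CONFIDENCE = 0.6   # assumed cut-off for a "useful" tag
WHITELIST = {"car", "vehicle", "truck", "motorcycle"}  # example subject matter


def moderate(analysis: ImageAnalysis) -> tuple[bool, str]:
    """Return (accepted, reason) for an uploaded image."""
    # Rule 1: block adult or otherwise unsuitable content.
    if analysis.adult_score >= ADULT_THRESHOLD:
        return False, "unsuitable content"

    # Rule 2: reject images the service could not say anything confident about.
    confident = {t for t, c in analysis.tags.items() if c >= MIN_TAG_CONFIDENCE}
    if not confident:
        return False, "poor quality: nothing recognisable"

    # Rule 3: only allow whitelisted subject matter.
    if not confident & WHITELIST:
        return False, "off-topic: no vehicle found"

    return True, "ok"


print(moderate(ImageAnalysis({"car": 0.95, "outdoor": 0.8}, adult_score=0.01)))
print(moderate(ImageAnalysis({"dog": 0.9}, adult_score=0.0)))
```

Keeping the rules in one small function means the thresholds can be tuned per audience without touching the upload pipeline itself.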

Fortunately, you don’t need to reinvent the wheel or hire a team of Artificial Intelligence PhDs to solve this problem. There are a number of Cloud services available that are simple to integrate with, fast and accurate, for example Amazon Rekognition and Microsoft Azure Cognitive Services.

Amazon’s and Microsoft’s offerings are both delivered as PaaS, exposing platform-agnostic web services for integration. Simply throw your image files at them, and you’ll get the analysis results back in an instant. They both charge based on consumption, charging fractions of a penny per image analyzed, with free tiers for proof of concept development.
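
To give a feel for how little integration code is involved, here is a rough sketch that builds (but does not send) a request to Azure’s Computer Vision image analysis REST endpoint, using only the Python standard library. The `v3.2` API version, the feature list and the example endpoint are assumptions on our part, so check them against the current Azure documentation for your resource.

```python
import urllib.parse
import urllib.request


def build_analyze_request(endpoint: str, key: str, image_bytes: bytes) -> urllib.request.Request:
    """Build a POST request that uploads raw image bytes for analysis."""
    # Ask for object detection, a description and adult-content ratings.
    query = urllib.parse.urlencode({"visualFeatures": "Objects,Description,Adult"})
    # Assumption: the v3.2 "analyze" route; your resource may use another version.
    url = f"{endpoint.rstrip('/')}/vision/v3.2/analyze?{query}"
    return urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": key,            # your resource key
            "Content-Type": "application/octet-stream",  # raw image upload
        },
    )


# Hypothetical endpoint and key, for illustration only.
request = build_analyze_request(
    "https://example.cognitiveservices.azure.com", "YOUR-KEY", b"...image bytes..."
)
print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` and parsing the response body with `json.load` would then give you the analysis as a Python dictionary.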

Computer Vision in action

In this example, our obedient model was identified as a poodle.

The Computer Vision algorithm has given us information about the position of the puppy within the original photo.

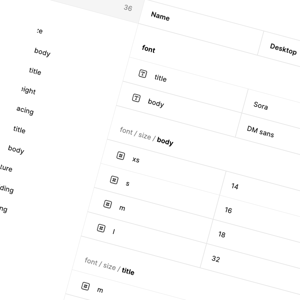

Let’s take a look at some of the raw data we get back from the Computer Vision web service – in this example, we used Azure’s Computer Vision service:

    {
      "Objects": [
        {
          "rectangle": { "x": 192, "y": 79, "w": 469, "h": 859, "object": "poodle" },
          "parent": {
            "object": "dog",
            "parent": {
              "object": "mammal",
              "parent": { "object": "animal", "confidence": 0.909 },
              "confidence": 0.906
            },
            "confidence": 0.902
          },
          "confidence": 0.526
        }
      ]
    }

The Objects data structure tells us about identified objects within the photo – we can see the bounding box of the object relative to the full image, and what the object was classified as. We can see it’s a poodle, with a series of parent categories getting more generic – so we also know it’s a dog, a mammal and an animal. All useful tags for classification and search terms.
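
As a rough illustration of turning that nested structure into flat tags, the sketch below walks the parent chain of one detected object and collects each label with its confidence. The `object_labels` helper is our own hypothetical name, and it assumes the shape of the sample above, where the most specific label sits inside `rectangle`.

```python
def object_labels(detected: dict) -> list[tuple[str, float]]:
    """Walk the parent chain, most specific label first."""
    labels = []
    node = detected
    while node is not None:
        # The sample carries the most specific label inside "rectangle";
        # fall back to that when the node has no "object" key of its own.
        name = node.get("object") or node.get("rectangle", {}).get("object")
        if name:
            labels.append((name, node.get("confidence", 0.0)))
        node = node.get("parent")
    return labels


# The single detected object from the sample response.
sample = {
    "rectangle": {"x": 192, "y": 79, "w": 469, "h": 859, "object": "poodle"},
    "parent": {
        "object": "dog",
        "parent": {
            "object": "mammal",
            "parent": {"object": "animal", "confidence": 0.909},
            "confidence": 0.906,
        },
        "confidence": 0.902,
    },
    "confidence": 0.526,
}

print(object_labels(sample))
# [('poodle', 0.526), ('dog', 0.902), ('mammal', 0.906), ('animal', 0.909)]
```

Note how the confidence rises as the labels get more generic: the service is only fairly sure it’s a poodle, but very sure it’s an animal.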

Description

    {
      "tags": [
        "outdoor", "dog", "animal", "mammal", "brown", "grass", "sitting", "small",
        "park", "green", "close", "bench", "standing", "bear", "field", "white"
      ],
      "captions": [
        { "text": "a close up of a dog", "confidence": 0.9568615 }
      ]
    }

The description data structure tells us general information about the image as a whole. It’s telling us, amongst other things, it’s an outdoor picture of a brown dog.
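
To show how tags like these could feed a search feature, here is a minimal sketch of an in-memory keyword index. The `TagIndex` class and the image file names are hypothetical; a real system would more likely push the tags into whatever search technology you already run.

```python
from collections import defaultdict


class TagIndex:
    """Tiny inverted index: tag -> set of image ids."""

    def __init__(self) -> None:
        self._images_by_tag: dict[str, set[str]] = defaultdict(set)

    def add(self, image_id: str, tags: list[str]) -> None:
        """Register an image under each of its tags."""
        for tag in tags:
            self._images_by_tag[tag.lower()].add(image_id)

    def search(self, query: str) -> set[str]:
        """Return the images that match every keyword in the query."""
        words = query.lower().split()
        if not words:
            return set()
        matches = [self._images_by_tag.get(word, set()) for word in words]
        return set.intersection(*matches)


index = TagIndex()
# Tags taken from the description above, plus a second made-up image.
index.add("img-001.jpg", ["outdoor", "dog", "brown", "grass", "park"])
index.add("img-002.jpg", ["indoor", "dog", "white"])

print(index.search("dog park"))  # {'img-001.jpg'}
```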

This is just a subset of the data we get back – the full data structure contains much more detail, such as confidence scores for each individual tag, adult content ratings, plus more. However, we hope this gives you an indication of how Computer Vision analyzes and classifies images, and of the quality and detail of the data returned.

Like to know more?

Here at Shout we build full stack mobile apps – everything from your iOS, Android & Xamarin apps, through to backend Cloud services, data integration, and data analytics. Find out how Artificial Intelligence can be integrated into your app stack to streamline your workflow, safeguard your users, and enhance your business intelligence.