When an AI produces a polished response, we tend to applaud the result and skip over the working. Yet credibility, whether human or artificial, rests on being able to trace each step that led to the answer. Reasoning AI makes those steps visible.

Human cognition, however, doesn't leap straight to a polished answer. We don't analyse datasets en masse and arrive directly at a conclusion; instead, we use steps of inference, logic, and reasoning to get there. For artificial intelligence to truly behave like human intelligence, it must do the same: hence, reasoning AI.

The rise of this approach suggests that the future of AI is going to be more process-based, rather than focused exclusively on arriving at a conclusion as fast as possible.

In this blog, we will examine what reasoning AI is, how it works, and why it's so important.

Understanding reasoning AI

Reasoning AI is exactly what it sounds like. Artificial intelligence gathers information and then applies reasoning and logic to reach its conclusion. More recent developments have expanded this, breaking the process down into steps and using inference and interpretation along the way. In short, the model behaves less like a parrot and more like a student who shows every line of thought before writing the answer.

AI systems are now taking a more sophisticated route to their conclusions and demonstrating the process that led them there.

How does reasoning AI work?

To understand how reasoning AI works, we first need to understand how typical generative AI works. Generative AI is essentially a word-prediction system: it is trained on vast swathes of data and, given an input, generates the word sequence most likely to follow it.

So if you ask generative AI, "How do I grow begonias in my garden?", the solution draws on word patterns learned from its training data and generates the most probable sequence of words in response.

While this is an impressive feat, you could argue it's not really that intelligent at all. The solution does not need to understand the concept of a begonia, a garden, or even the verb "to grow". It just needs to identify the most probable sequence of words: it's number crunching, essentially.
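To make the "word prediction" idea concrete, here is a toy bigram model in Python. It is a minimal sketch under heavy simplifying assumptions: real large language models predict sub-word tokens with a neural network trained on enormous datasets, not by counting word pairs in three made-up sentences. The principle is similar, though: the code picks the most frequent next word without any notion of what a begonia is.

```python
from collections import Counter, defaultdict

# Tiny made-up training corpus (an assumption for illustration only).
CORPUS = [
    "begonias grow best in partial shade",
    "begonias grow best in moist soil",
    "water begonias when the soil feels dry",
]


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Greedily append the most frequent follower until no follower is known."""
    words = [start]
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break
        # Ties go to the follower that was seen first in the corpus.
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(generate(model, "begonias"))
```

Run on this corpus, it produces "begonias grow best in partial shade": a fluent sentence assembled purely from word counts.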

Retrieval-augmented generation (RAG) improves coverage by searching a document index and then generating an answer, but the reasoning still happens in one opaque burst. Retrieval-augmented reasoning (RAR) feeds the retrieved snippets into every intermediate step, allowing you to inspect and challenge each inference along the way.
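The structural difference between the two pipelines can be sketched in a few lines of Python. This is a toy under stated assumptions: retrieval is plain word overlap rather than a real vector index, the three "documents" are made up, the generation step is a placeholder string, and the reasoning steps are written by hand rather than produced by a model. What it shows is that RAG retrieves once for the whole question, while a RAR-style loop attaches evidence to every intermediate step so each one can be checked.

```python
import re

# Made-up document index (an assumption for illustration only).
DOCS = [
    "Begonias prefer partial shade and protection from strong afternoon sun.",
    "Begonias grow best in moist, well-drained soil.",
    "Overwatering begonias can cause root rot.",
]


def tokenize(text: str) -> set[str]:
    """Lower-case word set; a stand-in for real embeddings."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query (stable on ties)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def rag(question: str, docs: list[str]) -> dict:
    """RAG: retrieve once, then generate the answer in a single opaque step."""
    context = retrieve(question, docs, k=2)
    return {"context": context, "answer": "<model output generated from context>"}


def rar(steps: list[str], docs: list[str]) -> list[dict]:
    """RAR-style: retrieve supporting evidence for each intermediate step."""
    return [{"step": step, "evidence": retrieve(step, docs)} for step in steps]


if __name__ == "__main__":
    print(rag("How do I grow begonias in my garden?", DOCS)["context"])
    steps = [
        "How much shade do begonias need?",
        "What soil do begonias need?",
        "Can watering too much cause root rot?",
    ]
    for item in rar(steps, DOCS):
        print(item["step"], "->", item["evidence"][0])
```

Because every step carries its own evidence, a reader can dispute one inference (say, the watering advice) without having to unpick the whole answer.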

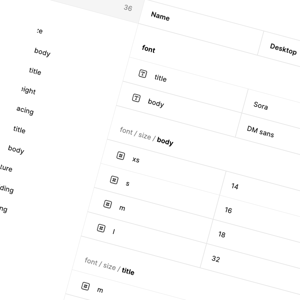

IBM's definition of reasoning AI shows that the underlying architecture is relatively simple, yet it works differently from standard generative AI. It has two parts: a knowledge base, where the data is held, and an inference engine, where the logic and reasoning take place. The system breaks a problem down into logical steps and then works through this roadmap en route to the conclusion.
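The knowledge-base-plus-inference-engine pattern is the classic design of rule-based expert systems, and forward chaining is one simple way to implement it. The sketch below is illustrative only, not a description of IBM's or any vendor's implementation, and the facts and rules are hypothetical. The engine repeatedly applies any rule whose premises are all known, recording each inference so the route to the conclusion can be traced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    premises: frozenset[str]
    conclusion: str


def forward_chain(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Apply rules until no new facts appear; return the facts and a step-by-step trace."""
    known = set(facts)  # the knowledge base
    trace: list[str] = []
    changed = True
    while changed:  # the inference engine: keep looping while rules still fire
        changed = False
        for rule in rules:
            if rule.premises <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(f"{' and '.join(sorted(rule.premises))} => {rule.conclusion}")
                changed = True
    return known, trace


# Hypothetical knowledge base for the begonia question.
RULES = [
    Rule(frozenset({"plant is a begonia"}), "plant prefers partial shade"),
    Rule(frozenset({"plant prefers partial shade", "spot gets morning sun only"}), "spot suits the plant"),
    Rule(frozenset({"spot suits the plant", "soil drains well"}), "plant the begonia there"),
]
FACTS = {"plant is a begonia", "spot gets morning sun only", "soil drains well"}

if __name__ == "__main__":
    _, steps = forward_chain(FACTS, RULES)
    for number, step in enumerate(steps, start=1):
        print(f"Step {number}: {step}")
```

Each printed step names the facts it relied on, so any link in the chain can be challenged on its own.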

That said, although large language models do predict the next token, their statistical training embeds syntactic and semantic patterns that encode a good deal of real-world knowledge. This enables them to manipulate concepts, not merely string words together.

Why is reasoning AI important?

The reasoning model is not just the future of AI; it's already here. In fact, reasoning AI has become the latest battleground in the artificial intelligence arms race. OpenAI launched its o3 reasoning model on 16 April 2025, while DeepSeek rolled out its V3-0324 upgrade on 25 March 2025.

But why? What's the big deal? Well, there are a few factors that could make AI reasoning a game-changer:

Improved insight from logical steps

Back in school, you were probably asked to show your working when tackling a maths problem in an exam. This was so you could demonstrate that you understood what was going on, rather than simply writing down an answer. You might even have earned marks for using the correct method when your final answer was wrong.

That's because there is value in the process itself. AI systems that demonstrate their reasoning are more valuable to the user because they offer a clearer picture of the topic at hand. Nuance and inference add to this: reasoning AI can point out possible deviations and interpret data in light of what it already understands.

Efficient machine learning

In the begonia example above, the generative AI offers a reasonable answer for how to grow begonias in your garden. From a machine learning perspective, this answer is only useful if someone asks the exact same question again. If the input changes, for example to "How do I hybridise my begonias?", the original answer is of little use, and the AI has to start from scratch.

By breaking the input down into blocks of reasoning, machine learning systems can build up their own knowledge. They will already have an understanding of what a begonia is and how it grows, and this can serve as the basis for answering the second question.

Procedural adaptability and increasingly sustainable AI

If someone asked you to name Henry VIII's third wife, you wouldn't need to read the complete history of the Tudor dynasty to arrive at the name Jane Seymour. But if you were instead asked to explain the international maritime economy in the 16th century, you'd need to expand your research. In other words, we cut our cloth to suit our means when it comes to cognitive processes.

This is a bit of a problem for existing AI. Generative AI tends to take a broad-brush approach to any problem it's faced with, examining huge volumes of data whether it needs to or not. With reasoning AI, this is not the case: the solution breaks the problem down and applies only the logical processes each part requires.
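The idea of matching effort to the problem can be illustrated with a toy budget allocator. Everything here is an assumption for illustration: the sub-problem counts, the per-step energy cost, and the fixed baseline are invented numbers, and real reasoning models do not necessarily allocate compute this way. The sketch gives each task one reasoning step per sub-problem, capped at a maximum, and compares that with spending the maximum on every task.

```python
from dataclasses import dataclass

WH_PER_STEP = 0.5  # hypothetical energy cost of one reasoning step, in Wh
MAX_STEPS = 20     # hypothetical fixed budget a one-size-fits-all system would spend


@dataclass
class Task:
    question: str
    sub_problems: int  # hypothetical output of a problem-decomposition step


def adaptive_steps(task: Task, max_steps: int = MAX_STEPS) -> int:
    """One step per sub-problem, at least one, never more than the cap."""
    return min(max(task.sub_problems, 1), max_steps)


def energy_wh(steps: int) -> float:
    """Energy for a given number of steps under the hypothetical per-step cost."""
    return steps * WH_PER_STEP


TASKS = [
    Task("Who was Henry VIII's third wife?", sub_problems=1),
    Task("Explain the 16th-century international maritime economy.", sub_problems=12),
]

if __name__ == "__main__":
    fixed = sum(energy_wh(MAX_STEPS) for _ in TASKS)
    adaptive = sum(energy_wh(adaptive_steps(t)) for t in TASKS)
    print(f"Fixed budget: {fixed} Wh, adaptive budget: {adaptive} Wh")
```

With these made-up numbers, the simple question costs one step while the complex one still gets the twelve it needs.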

One potential result of this targeted approach is increasingly sustainable AI. The International Energy Agency estimates that a single ChatGPT request uses about 2.9 Wh, roughly ten times the 0.3 Wh that Google reported for an average search back in 2009. The two figures come from separate sources published more than a decade apart.

While both figures are approximate and depend on hardware, data-centre efficiency, and workload, they still point to an enormous difference, and sustainability will be difficult to maintain as AI usage grows. A reasoning approach could make AI more adaptable, using only the energy a task needs, no more, no less.
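The arithmetic behind that gap is easy to check. The two per-request figures below come from the text; the daily volume of 100 million requests is a hypothetical round number chosen only to show how the difference scales, not a reported usage statistic.

```python
# Per-request figures quoted in the text (both approximate).
CHATGPT_WH = 2.9  # IEA estimate for one ChatGPT request
SEARCH_WH = 0.3   # Google's 2009 figure for one search

HYPOTHETICAL_DAILY_REQUESTS = 100_000_000  # illustrative volume, not a real statistic


def daily_mwh(wh_per_request: float, requests: int) -> float:
    """Convert a per-request cost in Wh to a daily total in MWh (1 MWh = 1,000,000 Wh)."""
    return wh_per_request * requests / 1_000_000


if __name__ == "__main__":
    print(f"Ratio: {CHATGPT_WH / SEARCH_WH:.1f}x")
    print(f"Chat-style requests: {daily_mwh(CHATGPT_WH, HYPOTHETICAL_DAILY_REQUESTS):,.0f} MWh/day")
    print(f"Searches: {daily_mwh(SEARCH_WH, HYPOTHETICAL_DAILY_REQUESTS):,.0f} MWh/day")
```

At that hypothetical volume, the gap works out to roughly 290 MWh versus 30 MWh per day.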

Reasoning progress is already measured on dedicated benchmarks such as GSM8K, MATH, and ARC-Challenge. Techniques such as chain-of-thought and tree-of-thought prompting expose the intermediate steps, giving auditors a clearer view of where an answer may have gone wrong.
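One way an auditor can use those visible steps is to check each one mechanically. The sketch below assumes a worked solution written in the style of GSM8K, where lines may contain simple calculations of the form `a op b = c` and the final answer follows a `####` marker. The example solution is made up and contains a deliberate slip; the checker is a simplified illustration, not part of any benchmark's official tooling.

```python
import re
from typing import Optional

# Matches simple calculations such as "36 + 12 = 48" (an assumed step format).
CALC = re.compile(r"(-?\d+(?:\.\d+)?)\s*([-+*/])\s*(-?\d+(?:\.\d+)?)\s*=\s*(-?\d+(?:\.\d+)?)")


def audit_steps(solution: str) -> list[tuple[int, str, bool]]:
    """Return (line number, calculation, is_correct) for every calculation found."""
    results = []
    for line_no, line in enumerate(solution.splitlines(), start=1):
        match = CALC.search(line)
        if not match:
            continue
        a, op, b, claimed = float(match[1]), match[2], float(match[3]), float(match[4])
        if op == "+":
            actual = a + b
        elif op == "-":
            actual = a - b
        elif op == "*":
            actual = a * b
        else:
            actual = a / b if b != 0 else float("nan")  # division by zero never checks out
        results.append((line_no, match[0], abs(actual - claimed) < 1e-9))
    return results


def final_answer(solution: str) -> Optional[str]:
    """Extract the text after the '####' marker, if present."""
    match = re.search(r"####\s*(.+)", solution)
    return match[1].strip() if match else None


SOLUTION = """A baker makes 36 rolls in the morning.
In the afternoon she makes 36 / 3 = 12 more.
In total she makes 36 + 12 = 46 rolls.
#### 46"""

if __name__ == "__main__":
    for line_no, calc, ok in audit_steps(SOLUTION):
        print(f"line {line_no}: {calc} -> {'OK' if ok else 'WRONG'}")
    print("Final answer:", final_answer(SOLUTION))
```

The checker flags line 3, where 36 + 12 was written as 46, even though the final answer on its own looks plausible.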

Reasoning is going to be inherent to the future of AI

In many ways, reasoning AI is not really up for debate. We can't have true artificial intelligence without reasoning. After all, logic, reasoning, and a step-by-step approach to problems are fundamental to intelligence. A smart person isn't the one with all the answers; it's the one who knows how to find the answers they don't have.

Until recently, this more elevated form of intelligence was beyond our grasp. Now, with major players like OpenAI and DeepSeek launching their own reasoning AI tools, it's firmly within reach. What we do with this technology, and how fully we use its potential, will dictate how far we can go with it.