At some point in the 1960s, musicians stopped singing about holding hands and finding a thousand ways to say 'I love you' and started doing something very different. They took sojourns in India, delved deeply into Eastern philosophy and meditation, and expanded their musical palette with new instruments and forms. They took listeners on a magical mystery tour, and the result is some of the most interesting popular music ever recorded.

Psychedelic hallucinations are great for pop music. For technology, not so much. So when AI starts taking us on its own magical mystery tour, the results aren't quite so joyous. While some AI hallucinations are amusing, plenty of them are pretty annoying, and some are actively dangerous.

So let's dig a little deeper into what's going on here and find out what an AI hallucination actually is and what we should do when we encounter one. When AI hallucinates, should we just Let It Be? Or is this simply showing too much Sympathy for the Devil?

What is an AI hallucination?

For those '60s psychedelic bands, their weird and wonderful sound was a leap into the unknown. For a large language model (LLM), a hallucination is something similar: a move into territory where the model is unsure of the answer, and the results are often just as weird, though certainly less wonderful.

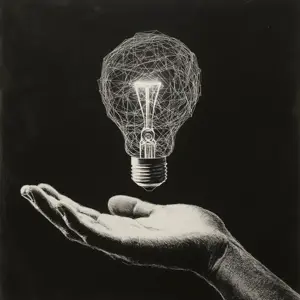

IBM has likened AI hallucinations to pareidolia, the human tendency to see faces everywhere we look. We might look at the bark of a tree or the surface of the moon and see two little eye-like holes, a diagonal line a little like a nose, and a curved line representing a mouth. Our brain then fills in the gaps, and the face begins to look real and vaguely human.

While these illusions feel eerie, they are really just a by-product of how our minds work. We can recognise thousands of real faces, and our brains are geared to register the differences between each one. Pareidolia is just our brain trying to apply this facial recognition data to the wrong context.

It's the same with LLMs. Pattern recognition is the LLM's 'thing', so it tries to find patterns even in places where they don't exist. It jumps to a conclusion and delivers an output based on that conclusion. At some point in the process, the AI makes a mistake. If it doesn't catch that mistake, the error becomes part of the data the rest of the output is built on, and a hallucination occurs.
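To make that last point concrete, here is a deliberately tiny Python sketch. It is not how any real LLM works internally: the "model" is a hypothetical hand-written table of next-word scores (all invented for illustration), it only looks at the single previous word rather than the whole context, and the names `NEXT_WORD` and `generate` are made up for this example. What it does share with real LLMs is that it is autoregressive: each word is chosen based on the model's own earlier output, so a wrong pick early on is never revisited and everything after it builds on the error.

```python
from typing import Dict, List

# Hypothetical next-word table standing in for a trained model.
# The scores are invented for illustration only. "webb" narrowly beats
# "vlt" at the first step, even though ESO's Very Large Telescope (VLT),
# not Webb, took the first image of an exoplanet.
NEXT_WORD: Dict[str, Dict[str, float]] = {
    "<start>": {"webb": 0.55, "vlt": 0.45},
    "webb": {"took": 1.0},
    "vlt": {"took": 1.0},
    "took": {"the": 1.0},
    "the": {"first": 1.0},
    "first": {"exoplanet": 1.0},
    "exoplanet": {"photo": 1.0},
    "photo": {"<end>": 1.0},
}


def generate(table: Dict[str, Dict[str, float]], max_words: int = 10) -> List[str]:
    """Greedy autoregressive generation over a toy next-word table.

    Each step looks only at the previous word, which is the model's own
    earlier output. Nothing checks whether an earlier choice was true, so
    an early mistake flows straight into every later step.
    """
    words: List[str] = []
    prev = "<start>"
    for _ in range(max_words):
        candidates = table.get(prev, {})
        if not candidates:
            break  # no known continuation for this word
        # Pick the highest-scoring next word (greedy decoding).
        nxt = max(candidates, key=candidates.get)
        if nxt == "<end>":
            break
        words.append(nxt)
        prev = nxt  # feed the output back in as the next input
    return words


if __name__ == "__main__":
    print(" ".join(generate(NEXT_WORD)))  # prints: webb took the first exoplanet photo
```

In this toy, flipping the first two scores (so that `"vlt"` wins) produces the correct sentence, and every later word is identical. The fluency of the rest of the output says nothing about whether its starting premise was right, which is one reason hallucinations can sound so convincing.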

AI hallucination examples in action

When John Lennon wrote an entire song based on the text of an 1843 circus poster, or when The Doors recorded a track inspired by William Blake poetry and medieval Irish literature, no one batted an eyelid. Okay, plenty of people did bat an eyelid, but these recordings have still gone down in history as classics of their genres.

We can't say the same about AI hallucinations. Here are some examples of AI hallucination in action: cases where AI got things badly wrong. I don't expect these to have the same cultural impact as The Beatles or Jim Morrison.

Magical Mystery Tour

- Google Bard (February 2023): claimed that the James Webb Space Telescope took the first photo of a planet outside our solar system (it did not). Alphabet's stock fell about 7%.

- ChatGPT (April 2023): invented a world record for walking across the English Channel, complete with a named athlete and a crossing time.

- AI-generated mushroom field guides (August 2023): self-published ebooks told foragers to “taste-test” unknown fungi, a potentially lethal instruction.

- Stable Diffusion job images (July 2023): prompts for “dishwasher worker” returned only people of colour, exposing bias in the model's training data.

- The 241543903 meme (2009 to 2023): an SEO prank led models to associate a random number with images of people with their heads in freezers.

Does the Magical Mystery Tour need to end? Understanding why this is a big deal

The '60s psychedelic era has made plenty of comebacks, but all that original weirdness was finished by the mid-1970s. Punks wanted something more urgent and authentic, disco fans wondered what was wrong with all that lovey-dovey pop in the first place, and the burgeoning electronic scene took music in a wholly different direction.

Is it time AI hallucinations went the same way, only without all the nostalgic comebacks? Absolutely. As the examples above show, these things can cause all sorts of trouble, from wiping value off a company's share price to reinforcing biases, spreading misinformation and even putting lives in danger.

At a fundamental level, AI hallucinations cause two serious kinds of damage. They erode trust in the technology at a time when AI already has many critics. And they make it difficult for AI to be a genuine assistant to human society. If AI is going off on all kinds of different trips, how are we supposed to trust it to deliver when we really need it to?

This is why eradicating AI hallucinations is going to be key to wider AI adoption and development over the coming years.