In 2025, data gravity has become a significant concern in enterprise AI, affecting CIOs, CTOs, CDOs, heads of engineering, product teams, and end users alike.

In this article, we examine what data gravity is and why it is a problem in enterprise AI. We also outline best practices and practical steps to reduce its impact.

What do we mean by data gravity?

As datasets grow, they attract applications, services, and additional data. This happens because large datasets are slow and expensive to move, so it is often impractical to move them to wherever processing happens.

Instead, teams move their services and smaller datasets to the large dataset, which is far easier. Over time, more and more applications and services cluster around it, which makes the data even harder to move. This self-reinforcing effect is known as data gravity.
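To put "slow and expensive to move" in perspective, a back-of-the-envelope calculation helps. The sketch below estimates how long it takes to copy a dataset over a network link. The function name, the 100 TB dataset, the 10 Gbps link, and the 70% efficiency factor are all illustrative assumptions, not measurements.

```python
def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate hours needed to copy a dataset over a network link.

    dataset_tb: dataset size in decimal terabytes (1 TB = 10**12 bytes).
    link_gbps: nominal link bandwidth in gigabits per second.
    efficiency: assumed fraction of nominal bandwidth actually achieved
        (illustrative only; real throughput depends on protocol, distance and contention).
    """
    if dataset_tb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, bandwidth or efficiency")
    bits = dataset_tb * 1e12 * 8                  # bytes -> bits
    effective_bps = link_gbps * 1e9 * efficiency  # usable bits per second
    return bits / effective_bps / 3600            # seconds -> hours


# Hypothetical example: 100 TB over a 10 Gbps link at 70% efficiency.
print(f"{transfer_hours(100, 10):.1f} hours")  # 31.7 hours
```

Even under these generous assumptions, a single full copy takes more than a day, before any retries, validation, or re-synchronisation. Moving the code to the data avoids this cost entirely.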

Why data gravity matters to enterprises

Data gravity is not an unexpected issue. Teams follow the path of least resistance, moving their assets to the data rather than the other way round, so gravitational pull is the natural outcome. So what makes data gravity such a problem?

Below, we list some of the key issues data gravity causes, with a specific focus on enterprise AI.

Latency and user experience

Pulling large datasets across regions or networks increases query and model response times. This eats into latency budgets, puts SLAs at risk, and can make real-time use cases impractical.
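Latency compounds when a single request makes several sequential calls to data held in another region. The sketch below adds local compute time to the network round trips a request cannot overlap. The 300 ms budget, 150 ms inference time, three lookups, and the 2 ms and 80 ms round-trip times are hypothetical figures chosen for illustration, not benchmarks.

```python
def end_to_end_ms(compute_ms: float, sequential_round_trips: int, rtt_ms: float) -> float:
    """Total request latency: local compute plus round trips that run one after another."""
    return compute_ms + sequential_round_trips * rtt_ms


BUDGET_MS = 300  # hypothetical latency budget for an interactive AI feature

# Hypothetical request: 150 ms of model inference plus 3 sequential lookups
# (for example a feature store, a vector index and a metadata service).
same_region = end_to_end_ms(150, 3, rtt_ms=2)
cross_region = end_to_end_ms(150, 3, rtt_ms=80)

print(same_region, same_region <= BUDGET_MS)    # 156 True
print(cross_region, cross_region <= BUDGET_MS)  # 390 False
```

The model itself is identical in both cases; only the distance to the data changes, and that alone is enough to break the budget.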

Interoperability and integration

A centralised data lake or warehouse can still behave like a silo relative to other regions, vendors, or operational systems. As data gravity grows, interoperability and integration become harder to achieve. Common symptoms include incompatible schemas, insecure workarounds, and ad hoc extracts, all of which make it harder for enterprises to get the full benefit of AI.

Cost and operational risk

Network egress charges, replication, and bulk migration all add to operating costs. Beyond the direct expense, moving entire datasets increases operational risk and can open gaps in governance and compliance.
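A rough comparison shows why egress costs favour moving compute rather than data. The sketch below contrasts a daily job that copies a full dataset out of its home region with one that runs in place and ships only its results. The per-GB rate is a placeholder, not any provider's published price, and the 5 TB and 2 GB volumes are hypothetical.

```python
HYPOTHETICAL_USD_PER_GB = 0.02  # placeholder egress rate; check your provider's actual pricing


def monthly_egress_usd(gb_per_run: float, runs_per_month: int,
                       usd_per_gb: float = HYPOTHETICAL_USD_PER_GB) -> float:
    """Egress cost for a recurring job that transfers gb_per_run on every run."""
    return gb_per_run * runs_per_month * usd_per_gb


# Data to compute: copy a 5 TB (5,000 GB) dataset to another region every day.
copy_data_to_compute = monthly_egress_usd(5_000, 30)
# Compute to data: run the job in place and ship roughly 2 GB of outputs each day.
ship_results_only = monthly_egress_usd(2, 30)

print(f"copy data daily:   ${copy_data_to_compute:,.2f}/month")  # $3,000.00/month
print(f"ship results only: ${ship_results_only:,.2f}/month")     # $1.20/month
```

The absolute numbers depend entirely on the rate you plug in; the ratio between the two approaches is what matters.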

Agility and time to value

AI is evolving rapidly, so any enterprise that hopes to get the best from it must be able to act quickly, responding to the latest developments to stay ahead of the curve.

Data gravity works against this by slowing adoption cycles and experimentation. Teams struggle to respond to new requirements and to operationalise models at the pace the business expects.

As a result, enterprises can lose ground to competitors simply because data gravity is slowing their response and delaying adoption.

Platform lock-in and innovation risk

One of the most exciting aspects of AI is the freedom to combine models, tools, and platforms as the technology evolves, but concentrating large datasets in one place works against this, centralising control and limiting choice.

When core data is tied to a single platform, enterprises face high switching costs and fewer options. Breaking away from the status quo becomes very difficult, and development suffers as a result.

How to reduce data gravity in AI

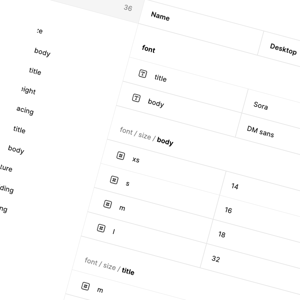

Reducing data gravity in AI depends on four levers:

- Placement

- Movement

- Format

- Control

The overall goal is to bring compute to the data rather than the other way round. Placement puts compute close to the data, movement limits transfers to what is strictly necessary, format keeps storage efficient, and control maintains strong governance.

The following best practices show how to apply these levers in enterprise AI.

Bringing computing to the data

Run training, fine-tuning, and serving in the region where the data already resides. Co-locate feature stores and vector indices with their source systems. Where data is created at the edge, use edge computing to process it close to where it is generated or stored. This minimises latency and cross-region transfers, and improves agility.
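Placement decisions can be made mechanically once you know where each dataset lives. The sketch below picks the region that minimises the volume of data a job would have to pull in. The catalogue, dataset names, region names, and sizes are hypothetical; a real scheduler would also weigh GPU availability, cost, and data-residency rules.

```python
from collections import defaultdict

# Hypothetical catalogue: dataset name -> (home region, size in GB).
CATALOGUE: dict[str, tuple[str, float]] = {
    "transactions": ("eu-west", 40_000),
    "customer_profiles": ("eu-west", 1_200),
    "product_embeddings": ("us-east", 300),
}


def choose_region(datasets: list[str],
                  catalogue: dict[str, tuple[str, float]]) -> tuple[str, float]:
    """Pick the region that minimises GB moved for a job reading `datasets`.

    Data already in the chosen region stays put; everything else must be
    transferred in. Returns (region, gb_to_move).
    """
    if not datasets:
        raise ValueError("job reads no datasets")
    gb_by_region: dict[str, float] = defaultdict(float)
    for name in datasets:
        region, size_gb = catalogue[name]
        gb_by_region[region] += size_gb
    total_gb = sum(gb_by_region.values())
    # Running where the most data already lives minimises what has to move.
    best = max(gb_by_region, key=lambda r: gb_by_region[r])
    return best, total_gb - gb_by_region[best]


region, moved = choose_region(
    ["transactions", "customer_profiles", "product_embeddings"], CATALOGUE
)
print(region, moved)  # eu-west 300.0
```

Only the 300 GB of embeddings would cross regions here, instead of the 41 TB that would move if the job ran in `us-east`.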

Modularising data storage

Design domain data products with clear contracts and ownership. Publish only the data each use case needs, and avoid uncontrolled copies. This reduces contention for data without fragmenting the source of truth.

This protects the integrity of each dataset and reduces network congestion, because services and applications are no longer all competing for the same dataset.
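A data contract can be as simple as a declared owner, a schema, and a list of which columns each consumer may receive. The sketch below is a hypothetical, minimal `DataContract` class (not any particular tool's API) that validates rows against the schema and publishes only the columns a given use case is entitled to.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract for a domain data product."""
    product: str
    owner: str
    schema: dict[str, type]  # column name -> expected Python type
    consumers: dict[str, frozenset[str]] = field(default_factory=dict)  # use case -> permitted columns

    def publish(self, rows: list[dict], consumer: str) -> list[dict]:
        """Validate rows against the schema and return only the permitted columns."""
        if consumer not in self.consumers:
            raise PermissionError(f"{consumer!r} has no contract with {self.product!r}")
        allowed = self.consumers[consumer]
        published = []
        for row in rows:
            for col, expected in self.schema.items():
                # Missing columns fail this check too, since row.get returns None.
                if not isinstance(row.get(col), expected):
                    raise TypeError(f"{col!r} violates the {self.product!r} contract")
            published.append({col: row[col] for col in self.schema if col in allowed})
        return published


orders = DataContract(
    product="orders",
    owner="commerce-team",
    schema={"order_id": str, "customer_id": str, "amount": float},
    consumers={"demand-forecasting": frozenset({"order_id", "amount"})},
)
rows = [{"order_id": "o1", "customer_id": "c9", "amount": 42.0}]
print(orders.publish(rows, "demand-forecasting"))  # [{'order_id': 'o1', 'amount': 42.0}]
```

Because the forecasting team receives a narrow, validated projection rather than a full extract, the owning domain keeps the single source of truth and there is no uncontrolled copy of customer identifiers.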

Optimising data storage

Reduce storage size and access time through deduplication and by tiering data across hot, warm, and cold storage. Use columnar file formats, such as Parquet, and table formats that support partitioning to keep storage efficient and maintain lineage.
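Both techniques can be illustrated in a few lines. The sketch below assigns objects to a hot, warm, or cold tier by time since last access, and deduplicates content by SHA-256 hash so identical files are stored once while every original key still resolves. The 7-day and 90-day thresholds and the file names are hypothetical; in practice, tiering is usually handled by your storage platform's lifecycle policies.

```python
import hashlib
from datetime import datetime, timedelta, timezone

# Hypothetical thresholds; tune to your access patterns and storage pricing.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)


def assign_tier(last_accessed: datetime, now: datetime) -> str:
    """Classify an object as hot, warm or cold by time since its last access."""
    age = now - last_accessed
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"


def deduplicate(blobs: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, str]]:
    """Content-addressed deduplication.

    Returns (store, index): `store` maps each SHA-256 digest to its content,
    kept once; `index` maps every original key to a digest, so no key is lost.
    """
    store: dict[str, bytes] = {}
    index: dict[str, str] = {}
    for key, content in blobs.items():
        digest = hashlib.sha256(content).hexdigest()
        store.setdefault(digest, content)  # only the first copy is stored
        index[key] = digest
    return store, index


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(assign_tier(now - timedelta(days=2), now))    # hot
print(assign_tier(now - timedelta(days=30), now))   # warm
print(assign_tier(now - timedelta(days=400), now))  # cold

store, index = deduplicate({
    "a.csv": b"x,y\n1,2\n",
    "copy_of_a.csv": b"x,y\n1,2\n",
    "b.csv": b"x,y\n3,4\n",
})
print(len(store), len(index))  # 2 3
```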

Conclusion

Data gravity is a material constraint on AI programmes, but the right architecture can reduce its impact. With the right approach, the door remains open for innovation and agile development in this space.

Contact us to explore how AI can transform your digital products.