Agentic AI is moving swiftly from proof of concept to full-scale production. This is good news for enterprise leaders, as this type of AI promises to deliver strong productivity gains and a more efficient mode of operation.

But many agentic AI projects are stalling. They fall victim to weak orchestration, missing controls, or unclear economic goals and benefits. The problem is serious enough that Gartner expects more than 40 per cent of agentic initiatives to be cancelled by the end of 2027.

With this in mind, leaders need to approach agentic AI in a new way. Rather than treating it as a chat feature, they should treat it as a new application tier.

This means leaders need to build agentic AI around a clear operating model and pick the right orchestration layer. Self-hosting should only be considered when it offers a clear benefit over the alternatives.

What do we mean by agentic AI?

Agentic AI is artificial intelligence that plans, decides and takes actions independently. It bases its decisions on specific goals and the latest data, and it enacts tasks using its own toolset.

As a workflow actor, the AI agent will contact APIs, query datasets, write to systems and coordinate with a network of other agents or human users.

Why does this matter now?

This matters for enterprise leaders simply because AI agents are now a practical option in ordinary software. Recent platform updates have made this happen.

Enhancements such as OpenAI's agent building blocks and the computer-use features in Claude, which paved the way for agent-friendly models like Sonnet 4.5, mean that agents can now control GUIs securely inside sandboxed environments.

To put it simply, it matters because enterprise leaders have more access to AI agents than ever before.

The enterprise agent stack

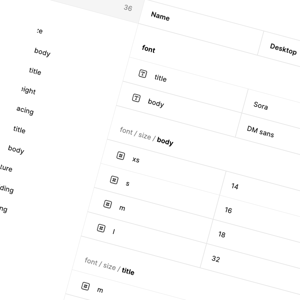

The ideal enterprise agent stack is built upon five layers. For enterprise leaders, this means in-house teams can oversee and take control of the entire architecture end-to-end.

Layer #1: Model fabric

At the model fabric layer, utilise a mix of API models to give your project breadth and to enable you to match the pace of change. Using selected open‑weight models will also provide you with additional control and customisation as required. Options include OpenAI's GPT-OSS models for research and lightweight deployment, Meta's Llama 3.1 open‑weights and Mistral models. Be sure to review your contract and data use terms regularly.

Layer #2: Orchestration layer

This is the control plane for long-running, stateful agents, supporting retries, memory, approvals, and human-in-the-loop operations.

Tech stack options at this level include LangGraph to bring stateful graphs and robust control to agent workflows, and Microsoft Agent Framework (public preview) alongside Azure AI Foundry's tracing and approvals.

Other options include CrewAI and AutoGen, which provide multi‑agent patterns that many teams use to prototype or ship production workflows.
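
To make the control-plane idea concrete, here is a minimal sketch of a stateful step runner with retries and resume. It is not the API of LangGraph, Microsoft Agent Framework, CrewAI or AutoGen; the `run_steps` function, the `completed` state key and the step names are hypothetical and exist only for illustration.

```python
from typing import Callable

State = dict[str, object]
Step = Callable[[State], State]


def run_steps(steps: list[tuple[str, Step]], state: State, max_attempts: int = 3) -> State:
    """Run named steps in order, retrying failures and recording progress in the state."""
    completed = list(state.get("completed", []))
    for name, step in steps:
        if name in completed:
            continue  # resume: skip steps that finished in an earlier run
        for attempt in range(1, max_attempts + 1):
            try:
                state = step(state)
                break
            except Exception:
                if attempt == max_attempts:
                    raise  # out of retries: surface the failure to the caller
        completed.append(name)
        # Store progress in the state so a later run can pick up where this one stopped.
        state = {**state, "completed": list(completed)}
    return state


# Hypothetical steps: the first fails once, then succeeds on retry.
attempts = {"fetch": 0}


def fetch_record(state: State) -> State:
    attempts["fetch"] += 1
    if attempts["fetch"] < 2:
        raise RuntimeError("transient error")
    return {**state, "fetched": True}


def post_update(state: State) -> State:
    return {**state, "posted": True}


final_state = run_steps([("fetch", fetch_record), ("post", post_update)], {})
```

A production orchestrator would persist the state outside the process and add approvals between steps, but the shape is the same: named steps, explicit state and bounded retries.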

Layer #3: Tooling and connectors

Agents require standardised methods for calling tools and accessing data. The Model Context Protocol (MCP) has emerged as an open standard, ideal for connecting assistants to systems. Treat MCP like a USB‑C for AI connectivity, applying the usual security due diligence, including allow-lists and per-tool scopes.
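
The allow-list and per-tool scope checks can be sketched as a small gate placed in front of every tool call. This sketch does not use the MCP SDK; the tool names, scope strings and the `authorise` helper are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical allow-list: tool name -> scopes that tool may ever use.
ALLOWED_TOOLS: dict[str, frozenset[str]] = {
    "crm.lookup_customer": frozenset({"crm:read"}),
    "billing.post_invoice": frozenset({"billing:read", "billing:write"}),
}


@dataclass(frozen=True)
class ToolCall:
    tool: str
    requested_scopes: frozenset[str]


def authorise(call: ToolCall, agent_scopes: frozenset[str]) -> bool:
    """Allow a call only if the tool is listed and every requested scope
    is permitted for both the tool and the calling agent."""
    tool_scopes = ALLOWED_TOOLS.get(call.tool)
    if tool_scopes is None:
        return False  # tool is not on the allow-list
    return call.requested_scopes <= tool_scopes and call.requested_scopes <= agent_scopes


# A read-only agent may look up a customer but may not post an invoice.
read_only_agent = frozenset({"crm:read"})
lookup_ok = authorise(ToolCall("crm.lookup_customer", frozenset({"crm:read"})), read_only_agent)
invoice_ok = authorise(ToolCall("billing.post_invoice", frozenset({"billing:write"})), read_only_agent)
```

Denying by default, as the `None` branch does, means a newly connected tool stays unusable until someone adds it to the list on purpose.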

Layer #4: Runtime and guardrails

Production agents require safety filters, audit trails, rate limits, identity, secrets and network boundaries. Managed options can remove some of the workload associated with this.

Consider managed services such as Amazon Bedrock Guardrails, Google Vertex AI Agent Builder and Agent Engine, and Azure AI Foundry's monitoring and approvals.

Layer #5: Observability and evaluation

You will need a reliable record for every tool call and every step in your plan, as well as all your task‑level KPIs.

Pair live tasks with synthetic tests and keep a test set that reflects your business. Use public agent and coding evaluations, such as SWE‑bench Live, as a pattern for your own evaluation, and run that evaluation continuously.
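
One way to get a record of every tool call is to wrap each tool in a tracing decorator. The sketch below keeps records in memory for simplicity; the `traced` decorator, the `TRACE` list and the `fx.convert` tool are hypothetical, and a real deployment would send these records to a tracing backend or append-only store.

```python
import functools
import time
from typing import Any, Callable

# In-memory trace, for illustration only.
TRACE: list[dict[str, Any]] = []


def traced(tool_name: str) -> Callable:
    """Wrap a tool function so every call is recorded, including failures."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            record: dict[str, Any] = {"tool": tool_name, "args": args, "kwargs": kwargs}
            try:
                result = fn(*args, **kwargs)
                record["ok"] = True
                return result
            except Exception as exc:
                record["ok"] = False
                record["error"] = repr(exc)
                raise
            finally:
                # Runs on success and on failure, so no call goes unrecorded.
                record["seconds"] = time.perf_counter() - start
                TRACE.append(record)
        return wrapper
    return decorator


@traced("fx.convert")  # hypothetical tool
def convert(amount: float, rate: float) -> float:
    return round(amount * rate, 2)


converted = convert(100.0, 1.25)
```

Because the record is appended in the `finally` clause, failed calls show up in the trace alongside successful ones, which is what an audit needs.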

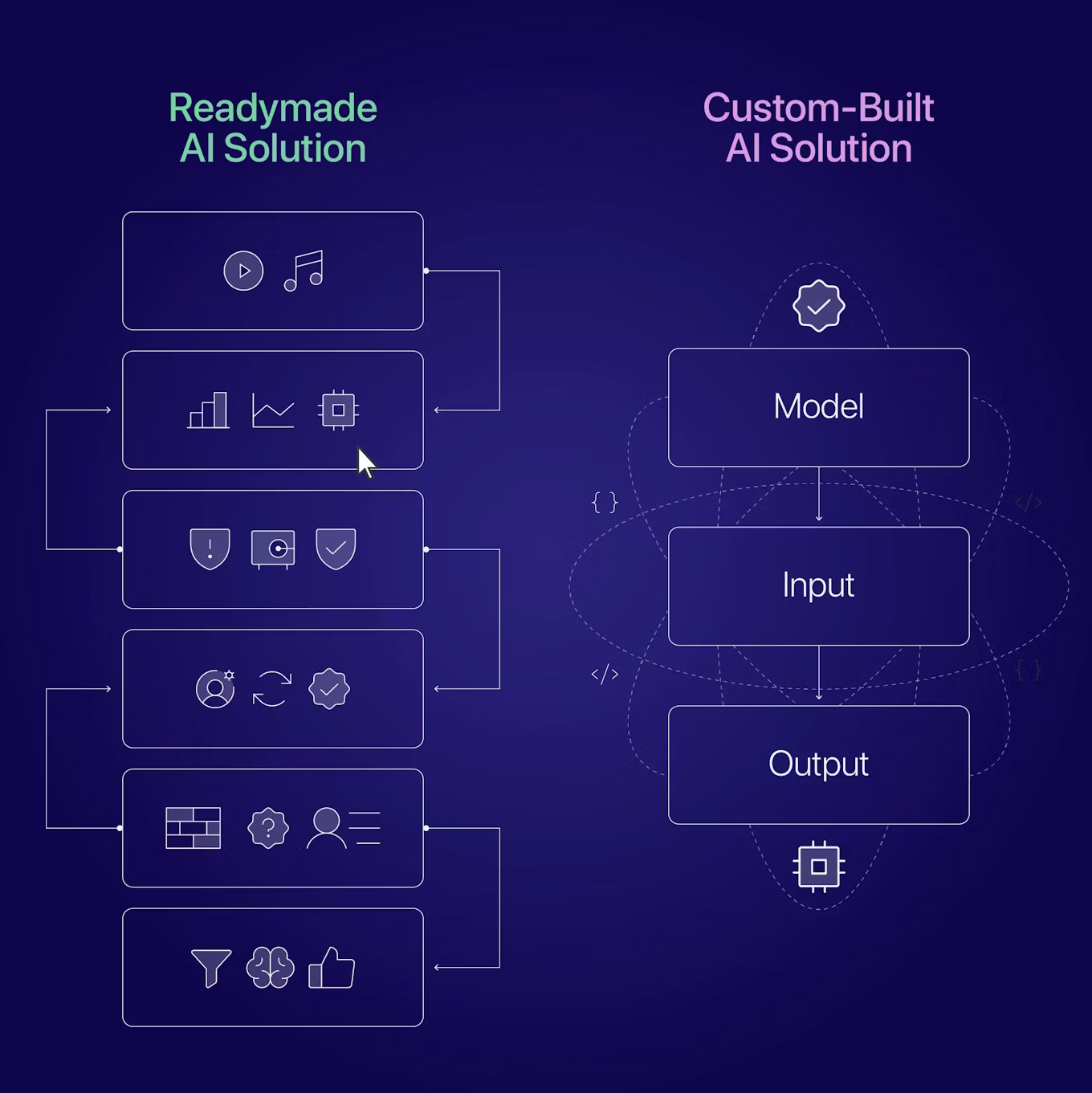

Build or buy? Making the right choice for your enterprise

Buying a managed agent platform is ideal if you need standardised guardrails and easy auditability with minimal setup. Consider this option if your data sits mainly in a single cloud and you need console-level approvals and reviews.

Building on an orchestration SDK is the better option if you need fine-grained control and multi-agent patterns. It's also the right choice if you are already running projects on your own infrastructure. LangGraph, Microsoft Agent Framework, AutoGen and CrewAI are the common choices.

Only choose self-hosting models if your budget and operational policies really demand it. When you assess your budget, be sure to take into account hardware, energy and utilisation costs to gain a complete picture. Many firms still find API pricing competitive at typical volumes, while open weights make sense for high, steady usage or data-sovereign cases.

Cost and hosting: A simple rule of thumb

To keep your hosting costs manageable, use API models at the beginning of the project as you demonstrate your project's value and learn your workload shape.

If you later consider self‑hosting, build a TCO sheet using NVIDIA's method, which accounts for utilisation and energy. Move to self‑hosted or mixed setups once you have stable, heavy, predictable workloads or strict data residency needs, or when you require custom fine‑tuning and very low latency at the edge.
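
To show why utilisation matters so much in a TCO sheet, here is a simplified cost-per-token sketch. It is not NVIDIA's method, only a rough illustration of the same inputs; the function name, the parameters and every number in the example are assumptions made for illustration.

```python
def self_hosted_cost_per_million_tokens(
    hardware_cost: float,          # purchase price of the server
    amortisation_months: int,      # period over which the hardware is written off
    power_kw: float,               # average power draw
    energy_price_per_kwh: float,
    ops_cost_per_month: float,     # staff, hosting and maintenance
    peak_tokens_per_second: float,
    utilisation: float,            # share of peak throughput actually used, 0..1
) -> float:
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    hours_per_month = 730
    monthly_cost = (
        hardware_cost / amortisation_months
        + power_kw * hours_per_month * energy_price_per_kwh
        + ops_cost_per_month
    )
    tokens_per_month = peak_tokens_per_second * utilisation * hours_per_month * 3600
    return monthly_cost / tokens_per_month * 1_000_000


# Made-up inputs; replace them with your own quotes and measurements.
inputs = dict(
    hardware_cost=240_000, amortisation_months=36, power_kw=10,
    energy_price_per_kwh=0.20, ops_cost_per_month=3_000,
    peak_tokens_per_second=5_000,
)
cost_at_10_percent = self_hosted_cost_per_million_tokens(**inputs, utilisation=0.10)
cost_at_80_percent = self_hosted_cost_per_million_tokens(**inputs, utilisation=0.80)
```

In this simplified model the monthly cost is fixed, so the unit cost at 10 per cent utilisation is eight times the unit cost at 80 per cent. Compare the result with your API price per million tokens at the utilisation you can realistically sustain.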

Governance issues to prepare for

You'll need to prepare your agentic AI project for a number of different governance standards and requirements.

- EU AI Act: Obligations for general‑purpose models apply from 2 August 2025. The voluntary Code of Practice is available as a pathway to show compliance.

- NIST AI Risk Management Framework: The Generative AI Profile is a practical checklist many security teams follow. Use it to structure controls across design, development, deployment and operations.

- UK expectations: The ICO's guidance on AI and data protection is widely referenced by boards and auditors in the UK.

- Data use posture: Vendor data use policies are subject to change. Anthropic, for example, has updated its default training-data settings for consumer accounts, with different terms for enterprise contracts. Make sure the account class matches the workload sensitivity.

Architectural structure: An example for your teams

Use the following architectural structure as a reference template while you build an architecture that suits your own specific needs.

- Ingress: These are the channels where work starts, such as email, tickets, chat, CRM cases and ETL events.

- Plan and state: Here, you'll need an orchestration graph that breaks your goals down into steps. LangGraph or Microsoft Agent Framework are useful options here.

- Policy and guardrails: Apply PII filters, topic rules, red team prompts and signature checks. Use managed guardrails if you are on AWS, and cloud‑native controls for simplified audits.

- Tools and data: Apply standard connectors via the Model Context Protocol to systems of record, data warehouses and document stores. Use allow‑lists and per‑tool scopes.

- Execution: Have agents act through APIs wherever they exist. Where no API is available, use a model designed for tool and computer use to work through the interface.

- Observability: Trace every action, set approval checkpoints and monitor cost and latency. Azure AI Foundry and other suites provide dashboards if you prefer a managed option.

Operating model: How to keep agents useful and safe

As you develop your enterprise agentic AI project, you'll need to ensure usefulness and safety. Follow this operating model to achieve this:

1. Define done for each workflow

Write explicit success criteria per workflow, for example, an updated record, a posted invoice or a reconciled payment. Instrument each criterion so you can measure it.
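
Success criteria are easiest to instrument when they are written as checks that code can run. A minimal sketch, assuming each agent run returns a plain dictionary; the workflow name, field names and `is_done` helper are hypothetical.

```python
from typing import Callable

Result = dict[str, object]
Criterion = Callable[[Result], bool]

# One explicit, testable definition of done per workflow.
DONE_CRITERIA: dict[str, list[Criterion]] = {
    "post_invoice": [
        lambda r: r.get("invoice_id") is not None,
        lambda r: r.get("ledger_status") == "posted",
    ],
}


def is_done(workflow: str, result: Result) -> bool:
    """A run counts as done only if every criterion for its workflow holds."""
    return all(check(result) for check in DONE_CRITERIA[workflow])


posted = is_done("post_invoice", {"invoice_id": "INV-1", "ledger_status": "posted"})
still_draft = is_done("post_invoice", {"invoice_id": "INV-1", "ledger_status": "draft"})
```

Logging the outcome of `is_done` for every run gives you the raw data for first pass yield and the other KPIs.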

2. Measure the right KPIs

Keep an eye on your main enterprise KPIs. For example:

- First pass yield per workflow

- Tool success rate and fallback rate

- Human‑in‑the‑loop rate and time to escalate

- Cost per successful task and tokens per tool call

- Data access exceptions per 1,000 runs
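
Most of the KPIs above can be computed from one record per agent run. A minimal sketch, assuming you log the fields shown in the hypothetical `Run` record; the field names and example values are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Run:
    succeeded_first_pass: bool   # done without a retry or human help
    succeeded: bool              # done eventually
    tool_calls: int
    tool_failures: int
    escalated_to_human: bool
    cost: float                  # spend for the run, in your currency
    data_access_exceptions: int


def kpis(runs: list[Run]) -> dict[str, float]:
    n = len(runs)
    if n == 0:
        raise ValueError("no runs to measure")
    calls = sum(r.tool_calls for r in runs)
    failures = sum(r.tool_failures for r in runs)
    successes = sum(r.succeeded for r in runs)
    total_cost = sum(r.cost for r in runs)
    return {
        "first_pass_yield": sum(r.succeeded_first_pass for r in runs) / n,
        "tool_success_rate": (calls - failures) / calls if calls else 0.0,
        "human_in_loop_rate": sum(r.escalated_to_human for r in runs) / n,
        "cost_per_successful_task": total_cost / successes if successes else float("inf"),
        "data_exceptions_per_1000_runs": 1000 * sum(r.data_access_exceptions for r in runs) / n,
    }


# Two made-up runs: one clean, one that needed a human.
report = kpis([
    Run(True, True, 4, 0, False, 0.10, 0),
    Run(False, True, 6, 2, True, 0.30, 1),
])
```

Note that cost per successful task divides total spend by successful runs only, so failed runs still count against the unit cost.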

3. Evaluate continuously

Blend business‑specific test sets with public evaluations. Use SWE‑bench Live as a guide for keeping tasks fresh.

4. Human approvals where risk is real

Configure gated steps for spend, personal data or external communications. Treat each approval as a learning signal, not just a block.
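
A gated step can be sketched as a check that runs before any action executes. The spend threshold, the `Action` fields and the `ask_human` callback below are hypothetical; in practice the callback would open a ticket or an approval request in your platform of choice.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    kind: str                          # e.g. "payment", "email", "crm_update"
    amount: float = 0.0
    touches_personal_data: bool = False
    external: bool = False             # leaves the organisation, e.g. a customer email


SPEND_LIMIT = 500.0  # hypothetical threshold


def needs_approval(action: Action) -> bool:
    return action.amount > SPEND_LIMIT or action.touches_personal_data or action.external


def execute(
    action: Action,
    run: Callable[[Action], str],
    ask_human: Callable[[Action], bool],
    decisions: list[tuple[Action, bool]],
) -> Optional[str]:
    """Run low-risk actions directly; route risky ones through a human first."""
    if needs_approval(action):
        approved = ask_human(action)
        decisions.append((action, approved))  # keep the decision as a learning signal
        if not approved:
            return None
    return run(action)


decision_log: list[tuple[Action, bool]] = []
low_risk = execute(Action("crm_update"), lambda a: "done", lambda a: False, decision_log)
blocked = execute(Action("payment", amount=900.0), lambda a: "paid", lambda a: False, decision_log)
```

The decision log is the learning signal: steps that humans approve every time are candidates for a higher threshold, and steps they often reject point to a prompt or tool problem.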

5. Change control

Agents change when models, prompts or tools change. Use semantic versioning, shadow runs and canary rollouts. Keep a rollback plan.
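
A canary rollout can be as simple as routing a fixed share of tasks to the new agent version. The sketch below assumes each task has a stable ID; the `pick_version` function and the version strings are hypothetical.

```python
import hashlib


def pick_version(task_id: str, stable: str, canary: str, canary_percent: int) -> str:
    """Route a fixed share of tasks to the canary, deterministically per task ID."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(task_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return canary if bucket < canary_percent else stable


# Send roughly 10 per cent of tasks to the new version.
version = pick_version("ticket-1042", stable="1.4.2", canary="1.5.0", canary_percent=10)
```

Hashing the task ID, rather than picking at random, keeps a given task on the same version across retries. Setting `canary_percent` back to 0 is the rollback.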

Vendor landscape at a glance

Consider these solutions for your project. Many of these have already been covered above.

- Open orchestration SDKs: LangGraph, Microsoft Agent Framework, AutoGen and CrewAI.

- Cloud agent platforms: Amazon Bedrock plus Guardrails, Google Vertex AI Agent Builder and Azure AI Foundry agent services.

- Connectivity standard: Model Context Protocol for safer, repeatable tool and data access.

A 90‑day plan that de‑risks the investment

The right timeline provides measurable increments that help you assess your progress. It also helps you ensure your project remains on track. Utilise this 90-day plan in your own agentic AI project.

Days 1 to 15

Pick two high‑value workflows with measurable outcomes and modest regulatory risk. Instrument the current baseline. Shortlist orchestration options and a guardrails approach. Confirm account classes and data‑use settings with each vendor.

Days 16 to 45

Build the orchestration graph with human approvals at the right steps. Connect tools using MCP where possible. Stand up tracing and cost dashboards.

Days 46 to 75

Run side‑by‑side pilots with a control group. Track first pass yield, time to complete, tool success rates, cost per task and escalation rate.

Days 76 to 90

Decide go or no‑go. If go, define SLOs and an on‑call model for agent incidents. Prepare your board update with value delivered, unit costs and risk posture against NIST AI RMF and EU AI Act expectations.

Final word

Agentic AI can be a valued and accountable assistant in production, provided that you treat it like a complete system. Make sure you choose an orchestration core that aligns with your enterprise's operating capabilities, and keep humans in the loop whenever you encounter risk.

Only choose a self-hosting model when the numbers make sense for your enterprise. Keep all this in mind, and ensure your agentic AI project avoids the scrap heap that Gartner warns about.